Last Update 21 Oct 24

The Latest on Nvidia's NVLM 1.0

Nvidia has recently unveiled NVLM 1.0, a family of open-source multimodal large language models (MLLMs) designed to compete with leading proprietary systems like OpenAI's GPT-4 and open-source models such as Meta's Llama 3.1. The flagship model, NVLM-D-72B, boasts 72 billion parameters and demonstrates similar performance to both proprietary and open-source competitors across both vision and language tasks.

For those of you who are a bit more technologically inclined, there are a few key features that make NVLM quite interesting:

- The model offers three architectural options - Decoder-only, Cross-attention, and Hybrid models - to optimize for different tasks, improving both training efficiency and multimodal reasoning.

- Nvidia introduced a novel 1-D "tile-tagging" system for efficient handling of high-resolution images. The result here should be far greater performance on tasks requiring detailed image analysis.

- Interestingly, unlike many multimodal models that see a decline in text performance after multimodal training, NVLM-D-72B’s accuracy on text-only tasks improves by an average of 4.3 points across key benchmarks.

- Nvidia is committed to an open source model. Nvidia is releasing the model weights and plans to provide the training code in Megatron-Core, which should encourage community collaboration and improvements. Although it’s not fully open source, as there isn’t full transparency on training data, it’s a great step to generate engagement within the community.

My Latest Thoughts

The release of NVLM 1.0 is a significant development that was previously unaccounted for in my narrative, but it comes as a welcome surprise. Although it’s come a bit from left field, I think it still aligns well with my original narrative and could be an important new catalyst for Nvidia's future growth.

Although I’m not very savvy when it comes to LLMs, the fact that one of the NVLM 1.0 models shows improved text-only performance over its LLM backbone after multimodal training is an impressive achievement. While I don’t think this is going to be anything disruptive, it could position Nvidia's models favourably in multimodal applications and perhaps make them appealing to a broader range of use cases compared to LLMs from competitors.

The open-source nature of NVLM 1.0, while not fully disclosing the training data, is a strategic move. By releasing the model weights and planning to make the training code available, Nvidia is encouraging widespread adoption and collaboration. This openness allows researchers and developers to build upon the model, leading to rapid improvements and optimizations. The more the community engages with NVLM 1.0, the more its efficacy is likely to increase.

From a business perspective, this strategy could create a positive feedback loop for Nvidia. The company doesn't need NVLM 1.0 to be the absolute best model on the market; it needs sufficient adoption to drive more GPU sales. I think of it like Nvidia attempting to create a software and hardware ecosystem in the way Apple has. As more people use the model, the demand for Nvidia GPUs could increase, especially if users aim to run these models locally or develop their own variations. Essentially, Nvidia is positioning itself to benefit from both the hardware and software sides of AI development—much like "owning the mine and the shop that sells the pickaxes."

This development could be Nvidia's first step toward a business plan that involves creating consumer AI-optimized GPUs, empowering individuals and smaller organizations to create and run their own models. While Nvidia’s current consumer GPU lineup has a variety of applications in both gaming and workstation loads, the bulk of the consumer sales are for gaming purposes. But Nvidia has the opportunity to augment their current GPU lineup or launch a new branch of GPUs that are more focussed on machine learning and running AI models locally. If successful, this market could potentially rival the size of the gaming GPU market. While this is a possibility and not yet a probability, it aligns well with some of my existing catalysts and almost blends two of my catalysts together.

Ultimately, I think these latest developments on NVLM 1.0 not only support my existing narrative, but enhance it by introducing a new avenue for growth for Nvidia. In some respects, it mitigates some risks related to increased competition by building up a larger ecosystem centered around Nvidia's technology. While I believe this move further solidifies Nvidia's position on top of the game, I won’t yet include it in my valuation. I strongly believe this has the potential to change the game for how and why consumers use GPUs, but I want to see what paths Nvidia look to head down before assigning value to this catalyst.

Key Takeaways

- Nvidia is poised to capture a larger share of the growing Data Centre market thanks to class-leading hardware supporting the most demanding applications.

- Nvidia's hardware is the best on the market for AI applications, and greater AI implementation will mean greater demand for Nvidia hardware.

- Nvidia's DRIVE autonomous vehicle platform opens the door for Nvidia to flourish in a $300 Billion market.

- Nvidia's lead in the consumer GPU segment will be unassailable, with competitors needing to catch up in both hardware and software implementation.

Catalysts

Generative AI And Machine Learning Dominance Keeps Driving Data Center Hardware Sales Amidst A Push For Sustainable Computing.

It seems we’ve reached an inflection point for generative AI where companies no longer see it as something that is “too expensive to adopt” but rather as something that is “too expensive not to adopt”. As AI and machine learning applications become increasingly prevalent across industries, the need for more advanced hardware infrastructure grows at both a user and enterprise level. This is where Nvidia steps in.

Nvidia's GPUs are widely used in AI and machine learning applications, such as autonomous vehicles, robotics, and natural language processing. The company's CUDA platform has become the industry standard for AI and machine learning, attracting numerous researchers and developers. Nvidia has created a positive feedback loop for itself: its architecture supports the development of new AI applications, and new AI applications are developed specifically for Nvidia's architecture because it’s the most advanced. As AI and machine learning continue to grow, demand for Nvidia's GPUs is expected to remain strong.

Nvidia has solidified itself as an authority in ‘Generative AI’, a subsection of artificial intelligence which can produce images, text and other media from written prompts. Generative AI first took off with mainstream users with the spike in popularity of ChatGPT, a large language model (LLM) that can generate text in response to users' prompts. Since then, almost every large company has adopted AI in some capacity. From AI models like Meta’s LLaMA, to even supercomputers like Tesla’s Dojo (which I’ve covered in another narrative of mine), these companies have one thing in common. They rely on Nvidia’s hardware.

In fact, Nvidia has formed a partnership with Meta, where the social media giant plans to purchase around 350,000 H100 GPUs, bringing its total to nearly 600,000 H100-equivalents of compute by the end of 2024. This gives a little insight into the massive demand for, and trust in, Nvidia's AI capabilities.

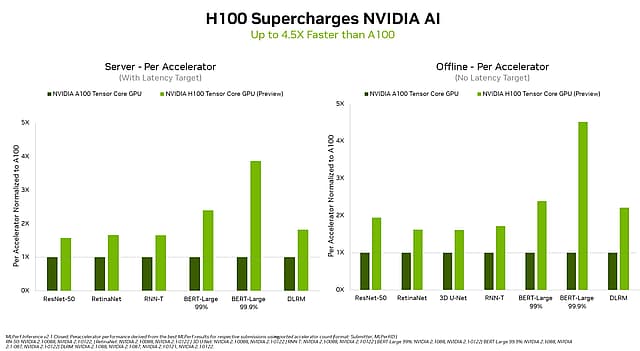

Nvidia: Breaking Records In Machine Learning Performance

Nvidia’s Hopper GPU architecture is already the gold standard when it comes to accelerated computing, but Nvidia endeavors to widen its already unassailable lead while addressing a key concern. The biggest limitation in improving AI is the amount of computational power on hand for training and running AI models.

According to the World Economic Forum, the computational power required for sustaining AI's rise is doubling roughly every 100 days. To achieve a tenfold improvement in AI model efficiency, the computational power demand could surge by up to 10,000 times. Now, this issue is great for Nvidia, as the solution is more GPUs. But we must reflect on what more GPUs actually means. It means energy requirements are accelerating rapidly, which flies in the face of a lot of sustainability principles.
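As a rough illustration of what a 100-day doubling period means over a full year, the arithmetic can be sketched as below. It assumes smooth exponential growth and a 365-day year, which are simplifications of mine rather than anything stated by the World Economic Forum, and the function name is purely illustrative.

```python
def annual_growth_factor(doubling_days: float, days_per_year: float = 365.0) -> float:
    """Growth multiple over one year if a quantity doubles every `doubling_days` days.

    Assumes smooth exponential growth (an illustrative simplification).
    """
    return 2.0 ** (days_per_year / doubling_days)


# Doubling roughly every 100 days, as quoted in the text.
factor = annual_growth_factor(100)
print(f"Implied compute demand growth over one year: about {factor:.1f}x")
```

Under these assumptions, a 100-day doubling period works out to somewhere above a twelvefold increase in compute demand per year, which helps explain why energy use becomes a concern so quickly.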

The energy requirements to support the current AI landscape, still in its infancy, are already accelerating, with annual growth rates for energy consumption between 26% and 36%. A paper written by Schneider Electric posits that AI could be using more power than the entire country of Iceland used in 2021.

To combat this, Nvidia revealed the NVIDIA Blackwell platform, which promises to deliver generative AI at up to 25x less cost and energy consumption than the NVIDIA Hopper architecture. Nvidia’s lead in the hardware race affords them the ability to now strive for efficiency over raw compute power, which further increases its competitive advantage. Not only is Nvidia hardware more powerful than competitors in AI workloads, it does so at a lower cost.

I believe Nvidia’s push for sustainability will further solidify their market share dominance in the data center hardware segment.

Nvidia’s Stranglehold On The Gaming Market Will Continue To Drive Consumer Hardware Sales.

Nvidia is a leading player in the gaming industry, thanks to its GeForce GPUs. If you take a look at Steam’s Hardware & Software Survey for April 2024, you can begin to get a picture of how dominant Nvidia is in the GPU space, particularly among gamers and enthusiasts. Nvidia cards hold the top 19 positions by ownership share for all Steam users and - perhaps more impressively - Nvidia occupies 33 of the top 40 GPUs based on these hardware ownership stats.

With the gaming market expected to grow in the coming years after already overtaking both Hollywood and the music industry COMBINED, Nvidia's dominance in the consumer GPU market will likely continue to bring solid sales growth. Forecasts pin the global gaming market size at US$545.98 Billion in 2028. According to studies conducted in 2019, 91% of males in generation-Z play video games regularly, compared to 84% of millennials, and both are remarkably high figures. The worldwide youth unemployment rate has also been gradually declining, which has increased purchasing power and sped up consumption of video games in recent years.

Next-gen video game engines like Epic Games’ Unreal Engine 5 are now being supported by Nvidia with their RTX software suite. Video game developers are now able to implement Nvidia technologies using the Nvidia NvRTX branches integrated into Unreal Engine, which helps cut down on development time while also improving the graphics. Aside from the obvious kickbacks Nvidia will receive from licensing their RTX suite, there's one other massive benefit that this catalyst will bring: gamers who want the best graphical fidelity and performance in these upcoming games will need Nvidia GPUs to get it.

Although the sales of Nvidia’s 4000 series were somewhat subdued - in part due to economic reasons, in part due to the marginal improvements over the previous 3000 series - they’re likely to announce a new line of consumer GPUs. Although it’s all just rumors now, it’s likely that Nvidia will release a 5000 series of GPUs towards the end of 2024/beginning of 2025 that make use of the Blackwell architecture. If these GPUs release with even a marginal performance improvement over the current 4000 series, then it’s likely it’d bury all competition from AMD and Intel as it would allow Nvidia to drop the price of the current gen cards to compete from both a price and a performance angle.

Nvidia Data Centers Will Be The Backbone Of The Internet’s Future.

Data centers are the lifeblood of the digital age, and Nvidia is at the forefront of this rapidly expanding industry. Nvidia's data center division, boosted by the surge in AI and high-performance computing (HPC), has seen impressive growth in the past few years. The company's high-end data center GPUs, like the H200, are the cornerstone for many supercomputers and cloud-based services around the globe.

The trend towards AI-accelerated applications, coupled with the need for faster processing power, has made Nvidia's GPUs essential to the backbone of the data center industry. With more data being generated and processed than ever before, organizations across the globe are looking towards Nvidia’s high-performance computing solutions to help manage, analyze, and store these vast amounts of data effectively and efficiently.

Nvidia's DGX systems are also becoming the go-to solution for AI training and inference in the cloud. These are designed as fully-integrated hardware and software stacks, delivering previously unheard-of compute power. Nvidia's DGX GH200 and H100 are like supercomputers in a box, designed to handle even the most demanding AI workloads. This hardware handles the processing behind generative AI models and LLMs better than anyone else's, which is a huge selling point and explains why the data center segment has been Nvidia’s best performer over the last 12 months and will likely remain so well into the future.

Nvidia Omniverse Could Disrupt Current Approaches To Manufacturing

Nvidia's Omniverse platform is a powerful real-time simulation and collaboration platform for 3D design workflows. With the growing demand for 3D content creation, virtual production, and remote collaboration, there’s a fairly large void that Nvidia is attempting to fill here.

Nvidia Omniverse bridges the gap between reality and the digital applications that drive company workflows, helping augment 3D workflows across a variety of platforms.

Mercedes-Benz announced that it is using the NVIDIA Omniverse platform to design and plan manufacturing and assembly facilities. Planners can access the digital twin of the factory, reviewing and optimizing the plant as needed at a relatively low financial cost and low planning overhead cost.

It’s not just legacy automakers who are seeing the benefits of implementing the Omniverse platform. Relative newcomer BYD, the world's largest electric vehicle maker, is adopting Omniverse for virtual factory planning and retail configurations.

It’s not just innovation for the sake of innovation either, with Wistron, one of Nvidia’s manufacturing partners, reducing end-to-end production cycle times by 50% and defect rates by 40% thanks to implementing Nvidia’s Omniverse into their processes.

While the ProViz segment is still in its infancy, increasing generative AI capabilities could be a catalyst that drives adoption as customers can turn to AI to assist with their creation and visualization needs, reducing the labor while delivering a much more engaging experience. The ProViz segment already delivered 45% year-on-year growth over the last 12 months and I believe that this could increase handily if customers continue seeing the level of efficiency improvements that Wistron has seen.

Assumptions

Data Center To Be The Business' Core Growth Driver

The growing demand for AI and accelerated computing leads to unprecedented demand for Nvidia’s data center architecture. Advancing AI applications means greater computational requirements, which Nvidia is able to service as a one-stop shop, owing to their three-chip strategy. Nvidia maintains a leadership position that is head and shoulders above competitors.

According to IDC, the worldwide spending of enterprises and service providers on hardware, software, and services for edge computing solutions - just one small part of Data Center applications - was projected to reach $274 billion in 2025. Furthermore, Prescient & Strategic Intelligence says that the entire Data Center market was worth $301 Billion in 2023 and could double to be worth $605 Billion per year in revenue by 2030. However, I believe both of these estimates are underselling growth.

Nvidia Data Centre Segment Growth - Nvidia Earnings

Nvidia’s Data Center segment growth has been astronomical over the previous few quarters, and while a fair amount will be attributable to Nvidia rapidly gaining market share, I believe the previous estimates didn’t account for the boom in AI. Based on the estimates above and Nvidia’s previous revenue performance, I estimate their market share at roughly 15%. Looking forward, I estimate that the entire Data Center market could be worth $900 Billion a year in 2028, of which I expect Nvidia to capture an even greater proportion at 20%. I am forecasting that deeper penetration amongst enterprise customers, aided by AI demand, could result in Nvidia Data Center revenue reaching $180 Billion by 2029.
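The Data Center forecast above is simple share-of-market arithmetic, and a minimal sketch of it follows. The $301 Billion, $900 Billion and 20% inputs come from the text; the implied market growth rate is a back-of-envelope derivation that assumes steady compounding from 2023 to 2028, which is my simplification, and the variable names are illustrative.

```python
# Inputs taken from the text (figures in billions of US dollars).
MARKET_2023_BN = 301.0   # Prescient & Strategic Intelligence estimate for 2023
MARKET_2028_BN = 900.0   # the author's estimate of the market in 2028
NVIDIA_SHARE = 0.20      # the author's expected Nvidia share

# Forecast Nvidia Data Center revenue = market size x share.
data_center_revenue_bn = MARKET_2028_BN * NVIDIA_SHARE

# Implied annual market growth, assuming smooth compounding over 5 years
# (an illustrative simplification, not a figure from the source).
years = 2028 - 2023
implied_market_cagr = (MARKET_2028_BN / MARKET_2023_BN) ** (1 / years) - 1

print(f"Forecast Data Center revenue: ${data_center_revenue_bn:.0f}B")
print(f"Implied market growth: {implied_market_cagr:.1%} per year")
```

Under these assumptions the $900 Billion figure implies the market compounding at a little under 25% a year, well ahead of the doubling by 2030 suggested by the third-party estimate.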

Expansion Of The Gaming Market Is A Wave Nvidia Will Ride

I believe Gaming revenue grows slightly but at a lower rate than before. The general expansion of the gaming market to a $545B powerhouse will naturally see benefit flow through to Nvidia. I predict Nvidia will still have a healthy lead in the consumer GPU market, but not as powerful as it is now.

Nvidia’s 30 series was a massive hit, but we’ve seen the latest 40 series hit less than stellar sales figures with the older GPUs still being incredibly popular. AMD and perhaps Intel will begin chasing down Nvidia’s market share lead as their own AI-driven GPU software helps close the performance gap, but future Nvidia product releases could hamper AMD and Intel in gaining ground. An optimistic estimate would see gaming revenues grow at 8% pa, which is less than the growth over the previous 5 years but still a healthy boost. I forecast revenues of $19.86 Billion in 2029, up from $13.52 Billion over the previous 12 months.
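The gaming forecast is a straightforward compound growth calculation, sketched below. The $13.52 Billion base and 8% rate come from the text; treating the horizon as exactly five annual compounding periods is an assumption, and the helper name is illustrative.

```python
def compound(base: float, annual_rate: float, years: int) -> float:
    """Value of `base` after growing at `annual_rate` for `years` annual periods."""
    return base * (1.0 + annual_rate) ** years


GAMING_TTM_BN = 13.52   # trailing 12-month gaming revenue from the text, in $B
GROWTH_RATE = 0.08      # the optimistic 8% pa assumption
YEARS = 5               # assumed horizon out to 2029

gaming_2029_bn = compound(GAMING_TTM_BN, GROWTH_RATE, YEARS)
print(f"Forecast 2029 gaming revenue: ${gaming_2029_bn:.2f}B")
```

The result lands within a cent or so of the $19.86 Billion quoted above, with the small difference down to rounding.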

Pro Viz Adoption Will Be Relatively Subdued

In this case, I’ll assume Pro Viz adoption to be negligible. Nvidia’s bet on their Omniverse (the Nvidia ‘metaverse’) is a fairly low-risk, high-reward bet on AR adoption becoming more commonplace in the workplace. Although performance has been handy with a 45% YoY increase in quarterly revenues, I don’t particularly see the segment meaningfully taking off in the near term. I’ll use a steady 4% growth rate in all valuation scenarios to encapsulate minor customer expansion. I forecast 2029 revenues of $2.05 Billion.

Immense Opportunities In The Automotive Segment

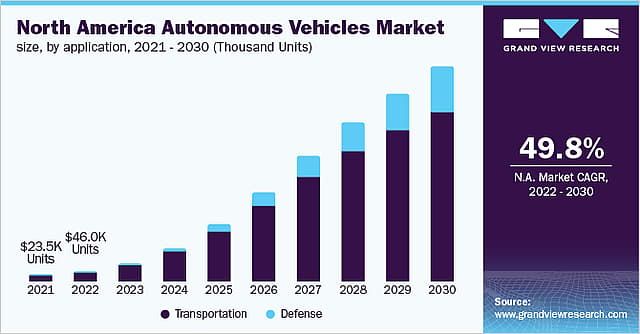

In a previous investor presentation, Nvidia detailed that its combined businesses participate in a market with over $1T in opportunity, with the automotive opportunity being the equal largest at $300B. While I don’t believe the automotive segment will ever be as large as the data center segment, I do think it’s the part of Nvidia that has the most growth potential. Considering Nvidia’s automotive segment is the smallest by revenue, but is supposedly the equal largest by opportunity, the company itself thinks that this could be a valuable growth pathway.

Grand View Research: Autonomous Vehicle Market Growth in North America

Nvidia’s historical automotive revenues have been driven by infotainment, but this is set to change as Nvidia begins to dedicate resources towards NVIDIA DRIVE, their autonomous vehicle and AI cockpit platform. Nvidia DRIVE already has some important design wins under its name, with Chinese automakers BYD, XPeng and GAC expected to have Nvidia’s DRIVE Thor in their vehicles in 2025. Nvidia anticipates an $11B design win pipeline through to FY28 based on DRIVE Orin, which started ramp in FY23. In my optimistic forecast, I’ll estimate that Nvidia realizes 60% of that design win pipeline, resulting in roughly $7B in revenue in 2028. Extending this to a 5-year estimate, I believe that the Automotive segment will continue growing at a 50% rate to 2029, seeing $10.5 Billion in revenue in 5 years’ time.
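The automotive numbers above can be traced step by step, as in the sketch below. The $11B pipeline, 60% realization and 50% growth rate come from the text; note that 60% of $11B is $6.6B, so the $7B figure reflects rounding up, and carrying that rounded figure forward is my reading of how the $10.5 Billion is reached.

```python
PIPELINE_BN = 11.0        # design win pipeline through FY28, from the text ($B)
REALIZATION_RATE = 0.60   # share of the pipeline assumed to be realized
GROWTH_TO_2029 = 0.50     # assumed growth from 2028 to 2029

# Step 1: realized pipeline revenue before rounding.
realized_2028_bn = PIPELINE_BN * REALIZATION_RATE   # about 6.6

# Step 2: the text works with roughly $7B, i.e. the figure rounded up.
rounded_2028_bn = 7.0

# Step 3: apply one further year of 50% growth to reach the 2029 estimate.
automotive_2029_bn = rounded_2028_bn * (1.0 + GROWTH_TO_2029)

print(f"Realized pipeline (unrounded): ${realized_2028_bn:.1f}B")
print(f"Forecast 2029 automotive revenue: ${automotive_2029_bn:.1f}B")
```

Starting from the unrounded $6.6B instead would give a 2029 figure a little under $10 Billion, so the rounding step matters at the margin.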

Net Margin To Plateau As Competition Ramps Up

Previously, I had anticipated gross and net margins to stagnate after seeing a minor drop in FY23. I was very wrong on that, as margins began to climb again to a ridiculous new height. I understand that right now margins are elevated due to red-hot demand and a lack of competition, two factors that I think will change over the next few years. I still anticipate a hot market for data center hardware and Nvidia to have an advantage over competitors, but I expect the magnitude of both of these vectors to taper off. Likewise, I could also foresee leaner margins as ramp-up costs of new architectures rise, but this will be offset by later operational improvements and reductions in costs (both supply chain driven and reduced R&D expenditure) in the latter half of the 5 years. I’ll use a Net Profit Margin of 40% to forecast future earnings. This is lower than the current 53.4%, but it’s also higher than the long run average which had bounced around the 20–30% mark.

Immense Earnings Growth Supports High Price-to-Earnings Multiple

Given the earnings and revenue growth established in this scenario, I feel that the company could be trading at around a 60x P/E ratio. This is slightly below the current P/E ratio of 65.8x, and is still very elevated compared to peers, but I think the earnings growth could support it, particularly if Nvidia maintains an appreciable competitive advantage in the hardware space. My forecasted earnings growth isn’t as high as the historical growth rate over the last few years, but I’m sure the market understands that this isn’t sustainable and was merely a “flash-in-the-pan” type scenario. In my mind, the higher price multiple will be supported by Nvidia’s strengthening within the market, rather than pure growth rates.
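To show how the assumptions in this section fit together, here is a rough sketch that combines the segment forecasts with the 40% net margin and 60x P/E. The narrative does not state a combined total, so the simple sum of the four segments, the omission of any other revenue lines, and the absence of discounting are all assumptions of this illustration rather than the author's stated valuation method.

```python
# 2029 segment revenue forecasts quoted in the Assumptions section ($B).
segment_revenue_bn = {
    "Data Center": 180.0,
    "Gaming": 19.86,
    "Pro Viz": 2.05,
    "Automotive": 10.5,
}

NET_MARGIN = 0.40    # forecast net profit margin from the text
PE_MULTIPLE = 60.0   # forecast price-to-earnings multiple from the text

# Illustrative assumption: total revenue is just the sum of these four segments.
total_revenue_bn = sum(segment_revenue_bn.values())
earnings_bn = total_revenue_bn * NET_MARGIN
implied_value_bn = earnings_bn * PE_MULTIPLE   # undiscounted 2029 value

print(f"Total 2029 revenue: ${total_revenue_bn:.2f}B")
print(f"Implied 2029 earnings: ${earnings_bn:.2f}B")
print(f"Implied 2029 market value at {PE_MULTIPLE:.0f}x: ${implied_value_bn:,.0f}B")
```

This is only a sanity check on scale. A proper fair value estimate would also discount the future figure back to today and account for share count, neither of which is modelled here.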

Risks

Increased Competition In The GPU Space Could See Market Share Decline

Nvidia faces competition from companies like AMD, Intel, and other hardware manufacturers. Intel has recently debuted their first discrete GPU product in the Arc A700 GPU series which could be a formidable competitor in the near future. Increased competition could lead to pricing pressures and reduced market share for Nvidia.

Consumer GPU Pricing Strategy Could Alienate Buyers

In the midst of a cost of living crisis, Nvidia’s high-priced flagship consumer cards are quite a lot to stomach for most enthusiasts. While I understand the flagship cards aren’t the cards most consumers would be buying, they represent the biggest improvement on a generational basis and are generally an indicator of how a new GPU generation will be received. If the flagship cards are priced too highly, it could act as a deterrent for consumers upgrading if the increase in performance doesn’t justify the costs. I believe this could reverberate down the product line to even the mid-to-lower end cards. If the flagship cards aren’t priced well, then in all likelihood, the mid-to-lower end cards won’t be priced in a way that’d prompt consumers to upgrade either.

Regulatory Hurdles Could Prevent Acquisitions And Stifle Growth

Nvidia's previously proposed acquisition of Arm Limited was under scrutiny from various regulatory bodies. The plans for acquisition were promptly cancelled in February 2022 due to the regulatory challenges, with Arm Limited having completed its own IPO in late 2023. Future acquisitions could be at risk from similar regulatory scrutiny, which could see acquisition-driven growth as something out of reach for Nvidia.

Supply Chain Disruptions Could Impact Future Hardware Sales

The global semiconductor industry has experienced supply chain disruptions due to various factors, including trade restrictions and the COVID-19 pandemic. Further disruptions, whether direct fallout from these issues or new disruptions resulting from macroeconomic changes, could impact Nvidia's ability to meet demand for its products, and the company could lose ground to competitors that have inventory on hand.


Disclaimer

Simply Wall St analyst Bailey holds no position in NasdaqGS:NVDA. Simply Wall St has no position in the company(s) mentioned. Simply Wall St may provide the securities issuer or related entities with website advertising services for a fee, on an arm's length basis. These relationships have no impact on the way we conduct our business, the content we host, or how our content is served to users. This narrative is general in nature and explores scenarios and estimates created by the author. The narrative does not reflect the opinions of Simply Wall St, and the views expressed are the opinion of the author alone, acting on their own behalf. These scenarios are not indicative of the company's future performance and are exploratory in the ideas they cover. The fair value estimates are estimations only, and do not constitute a recommendation to buy or sell any stock, and they do not take account of your objectives, or your financial situation. Note that the author's analysis may not factor in the latest price-sensitive company announcements or qualitative material.